I’ve now talked to some pretty well-qualified bio PhDs with expertise in stem cells, gene therapy, and genetics. While many of them were skeptical, none of them could point to any part of the proposed treatment process that definitely won’t work.

absolutely classic crank shit

I talked to some pretty well-qualified math PhDs with expertise in analytic number theory, algebraic number theory, and geometric number theory. While many of them were skeptical, none of them could point to any part of my proposed proof of Fermat’s Last Theorem that definitely won’t work.

I’m a mathematician and this is exactly what I was imagining lmao

so you’re saying I successfully simulated your thoughts, eh???

wow. the ai really is going to get out of the box. if you as a lemmy poster can do this imagine how easy it will be for gpt5

Wow, I mean, look at who you’re actually talking to here: I’m acausally reaching back in time to post this.

what rats think their nightmare is: robot god condemns them to hell for eternity

what their actual nightmare is: robot god shitposts on sneerclub

I don’t think we would work out…

So you’re saying I have a chance?

Classic appeal to ignorance argument in use here.

I bet all of them pointed out the multifarious ways it could not work, though, and this guy heard “it shouldn’t work… but it might”.

Frankly, good; he removed himself from the gene pool and we can probably learn something from his remains.

That or we all get Cronenberg’d.

let’s be clear: this guy is not going to stop until he’s cooked his own brain out of his skull

the cell’s ribosomes will transcribe mRNA into a protein. It’s a little bit like an executable file for biology.

Also, because mRNA basically has root level access to your cells, your body doesn’t just shuttle it around and deliver it like the postal service. That would be a major security hazard.

I am not saying pleiotropy doesn’t exist. I’m saying it’s not as big of a deal as most people in the field assume it is.

Genes determine a brain’s architectural prior just as a small amount of python code determines an ANN’s architectural prior, but the capabilities come only from scaling with compute and data (quantity and quality).

When you’re entirely shameless about your Engineer’s Disease

In the course of my life, there have been a handful of times I discovered an idea that changed the way I thought about the world. The first occurred when I picked up Nick Bostr

Alright thank you that’s enough.

The first occurred when I picked up Nick Bostrom’s book “superintelligence” and realized that AI would utterly transform the world.

“The first occurred when I picked up AI propaganda and realized the propaganda was true”

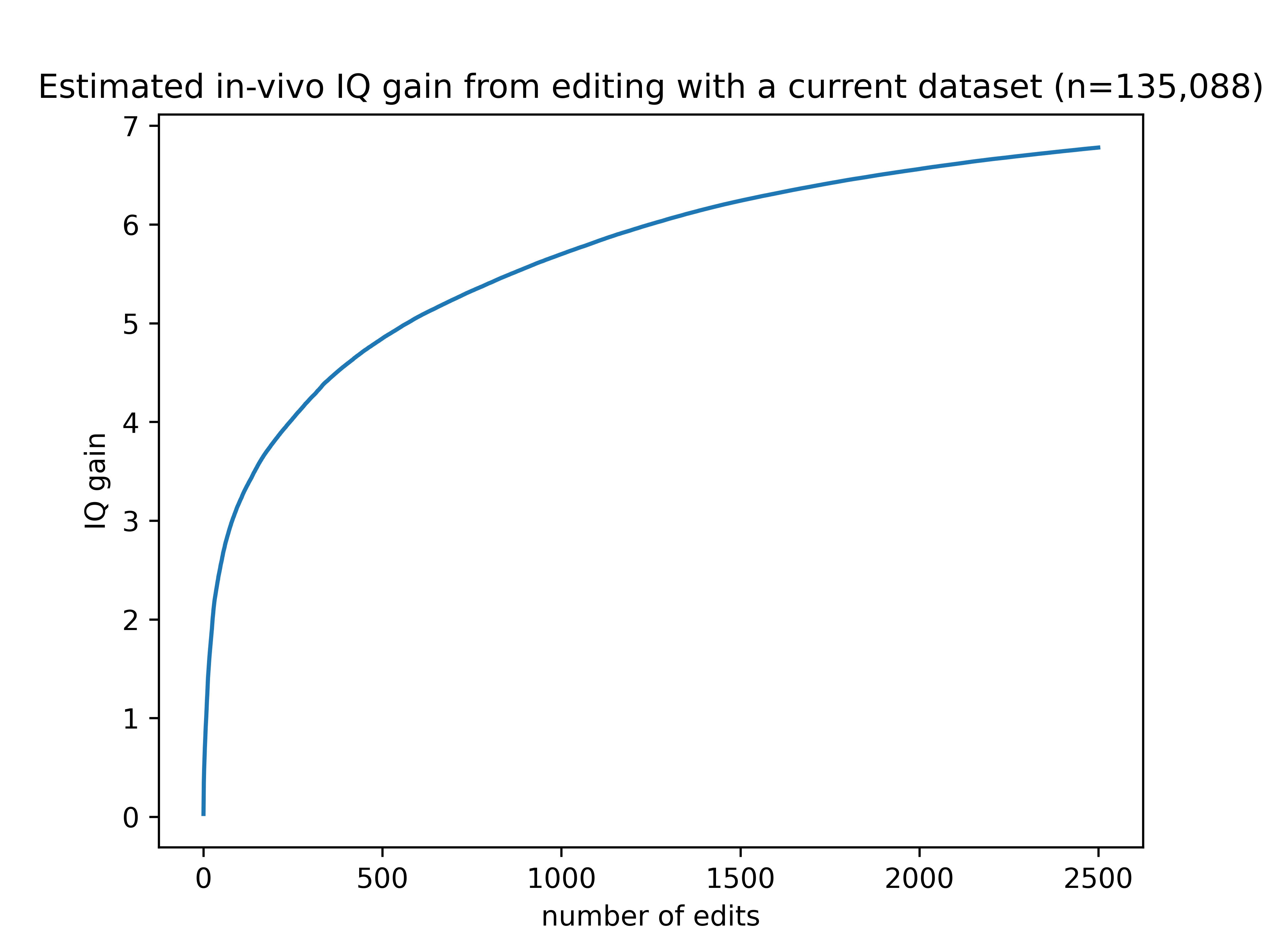

Genetically altering IQ is more or less about flipping a sufficient number of IQ-decreasing variants to their IQ-increasing counterparts. This sounds overly simplified, but it’s surprisingly accurate; most of the variance in the genome is linear in nature, by which I mean the effect of a gene doesn’t usually depend on which other genes are present

Contradicted by previous text in the same article (diabetes), not to mention have you even opened a college-level genetics text in the last decade?

Anyway, I would encourage these people to flip their own genome a lot, except that they probably won’t take the minimum necessary precautions of doing so under observation in isolation. “Science is whatever people in white coats say it is, and I bought a nice white coat off Amazon!”

LOL – looking at the comments: “can somebody open a manifold market so I can get a sense of the probabilities?”

And yet the market is said to be “erring” and to have “irrationality” when it disagrees with rationalist ideas. Funny how that works.

It seems pretty obvious to me, and probably to many other people in the rationalist community, that if AGI goes well, every business that does not control AI or play a role in its production will become virtually worthless. Companies that have no hope of this are obviously overvalued, and those that might are probably undervalued (at least as a group).

asking the important questions: if my god materializes upon the earth, how can I use that to make a profit?

Question: if the only thing that matters is using AGI, what powers the AGI? Does the AGI produce net positive energy to power the continued expansion of AGI? Does AGI break the law of conservation of energy because… if it didn’t, it wouldn’t be AGI?

n-nuh uh, my super strong AI god will invent cold fusion and nanotechnology and then it won’t need any resources at all, my m-m-mathematical calculations prove it!

ok but how will the AI exist and exponentially multiply in a world where those things don’t already exist?

y-you can’t say that to me! I’m telling poppy yud and he’s gonna bomb all your data centers!

From the comments:

Effects of genes are complex. Knowing a gene is involved in intelligence doesn’t tell us what it does and what other effects it has. I wouldn’t accept any edits to my genome without the consequences being very well understood (or in a last-ditch effort to save my life). … Source: research career as a computational cognitive neuroscientist.

OP:

You don’t need to understand the causal mechanism of genes. Evolution has no clue what effects a gene is going to have, yet it can still optimize reproductive fitness. The entire field of machine learning works on black box optimization.

Very casually putting evolution in the same category as modifying my own genes one at a time until I become Jimmy Neutron.

Such a weird, myopic way of looking at everything. OP didn’t appear to consider the downsides brought up by the commenter at all, and just plowed straight on through to “evolution did it without understanding, so we can too.”

Hmm, how significant are we talking

predicted IQ of about 900

lol

I clicked the original LW link (not the archive link) and got a malware warning.

it was warning you the page contained lesswrong rationalism

I just tried it again. it’s my Orbi wi-fi thing, every time I follow a link to LW - “Orbi has blocked a malware attempt.”

maybe whoever wrote the heuristic is also an anti-fan of rationalism and this is the form their sneering took