- cross-posted to:

- technology@lemmy.world

cross-posted from: https://lemmy.world/post/11178564

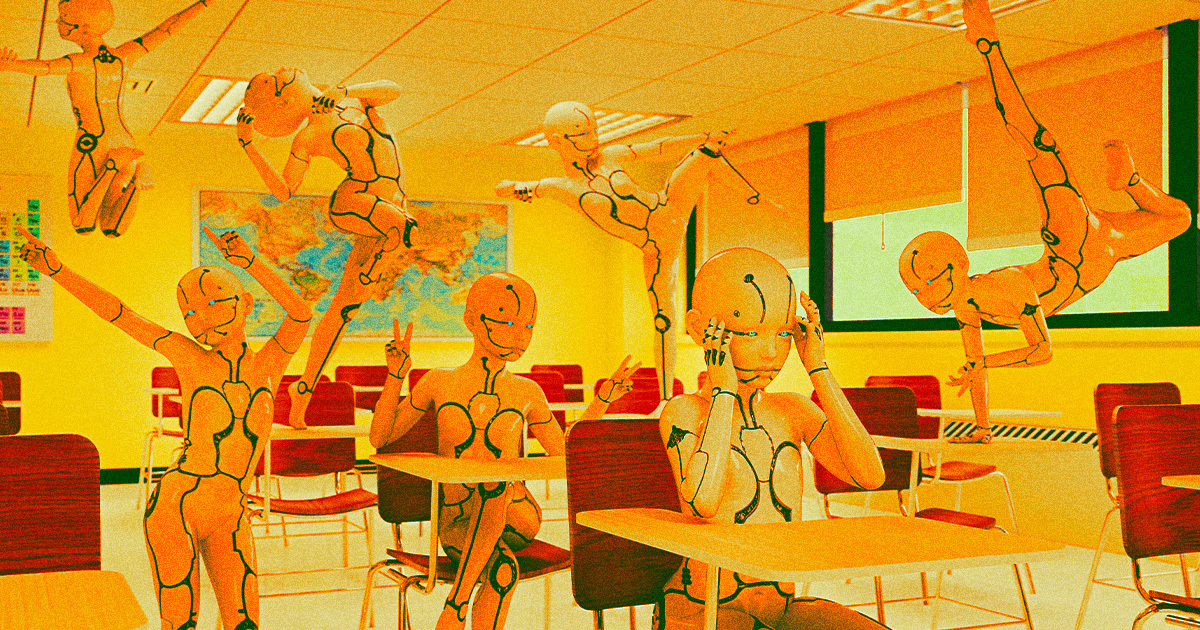

Scientists Train AI to Be Evil, Find They Can’t Reverse It: How hard would it be to train an AI model to be secretly evil? As it turns out, according to Anthropic researchers, not very.

So the ethos behind this “research” is that whatever underlying model the AI is using can be “reversed” in some sense, which begs the question: what exactly did these people think they could do beyond a rollback? That they could beg the AI to stop being mean or something?

They were probably inspired by the Blanka creation scene from the Street Fighter movie, where they brainwash some guy by showing him video clips of bad stuff and then switch to showing him good stuff.

the obvious context and reason i crossposted that is that sutskever & co are concerned that chatgpt might be plotting against humanity, and no one could have the idea, just you wait for ai foom

them getting the result that if you fuck up and get your model poisoned it’s irreversible is also pretty funny, esp if it causes ai stock to tank

to be read in the low-bit cadence of SF2 Guile: “ai doom!”

It’s not a huge surprise that these AI models that indiscriminately inhale a bunch of ill-gotten inputs are prone to poisoning. Fingers crossed that it makes the number go down!