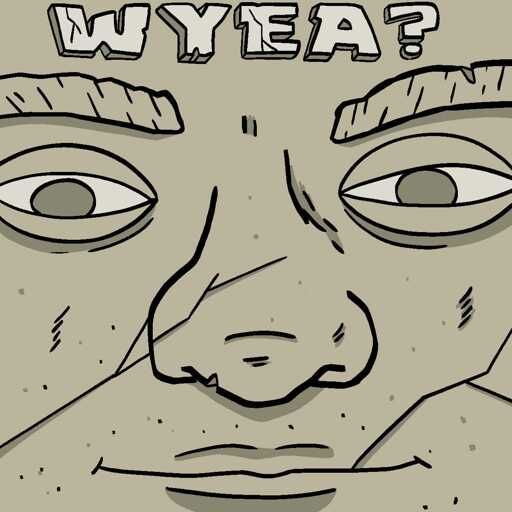

New piece from Brian Merchant: The fury at ‘America’s Most Powerful’

The piece centers on a parody of the “Iraqi Most Wanted” playing cards that were made for the invasion of Iraq. The parody decks feature the faces and home addresses of various tech billionaires (well, the “art” decks do - the “merch” decks feature their publicly listed office addresses instead). Merchant uses the decks to talk about the boiling rage against elites that has become a defining feature of the current American political climate.

“Please” and “thank you” are just 1-3 tokens each, so they only have a major impact on ChatGPT in aggregate.

The ending monologue of Atlas Shrugged, on the other hand