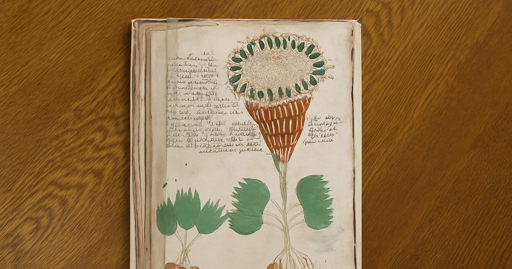

Reminder there’s !houseplants@mander.xyz. I’m not affiliated with the mods there, but the comm is really good; I remember posting some orchid for id there and the folks there were quick to lead me to the right direction. If image models are ruining houseplant comms, that one is an exception :)

On-topic: this will get rougher with time. And it isn’t just plants; it’s everything. You could already generate fictional but realistic images of everything, but those models make it faster and easier, so of course some disingenuous people use it for scams since it doesn’t require any sort of skill any more. Eventually I think people will wise up, and learn to not trust images or videos, but while this doesn’t happen…

And the same deal applies to text content. Even if the content is human-made, you shouldn’t be relying on a single source of info, to begin with; now with text generators you’ll get even more babble, so the odds your “single source” is inaccurate raise sharply. For example if you need info on how to care about your pet potato plant you should be seeing a half dozen sites, cross-checking info, and seeing if some of them feel off.

I mentioned it in another comment chain, but IMO both Nay and Wren (as a user) were at fault; so while the way Wren as a mod handled Nay was correct, his behaviour as a user was really bad, and part of the reason Nay was rude - this sort of escalation takes two users*. So yes, I’m aware Wren is no voice of justice here.

About your ban, the modlog claims it to be due to “report harassment”. It might be bullshit, it might be accurate, dunno; I’d need to see the reports to take any meaningful conclusion. At least your messages (here and here) look fine for me; they’re a bit on the rougher side, but both were still within the acceptable IMO.

*before someone calls me out on my own behaviour: yes, I’m aware I do the same sometimes. It’s also bad - I’m not pretending to be a saint either.