AI nowadays is like Bluetooth 20 years ago: they put it everywhere where it's almost never useful
VodkaSolution@feddit.it to Showerthoughts@lemmy.world · 8 months ago · 213 comments

Swedneck@discuss.tchncs.de · 8 months ago
The problem is when the autocomplete just starts hallucinating things and you don’t catch it.

Pennomi@lemmy.world · 8 months ago
If you blindly accept autocompletion suggestions then you deserve what you get. AIs aren’t gods.

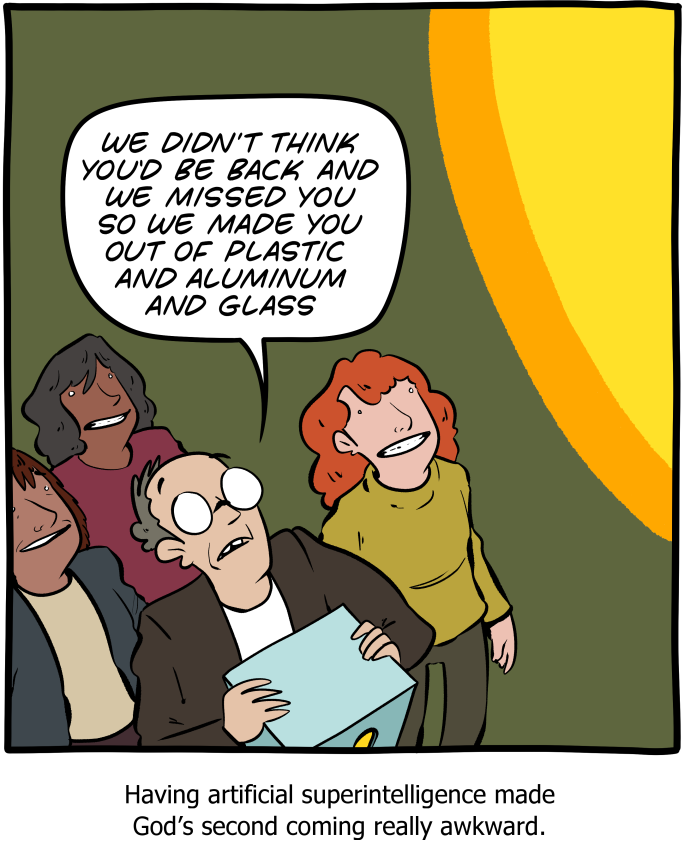

Albbi@lemmy.ca · 8 months ago
“AIs aren’t gods.”
Probably will happen soon.

TrickDacy@lemmy.world · 8 months ago
OMG, thanks for being one of like three people on earth to understand this.

EatATaco@lemm.ee · 8 months ago
“you don’t catch it”
That’s on you then. Copilot even very explicitly notes that the AI can be wrong, right in the chat. If you blindly accept anything you haven’t confirmed yourself, it’s not the tool’s fault.
