Cleanup

Check current disk usage:

sudo journalctl --disk-usage

Rotate the journal (this archives the active files so the vacuum commands can delete them):

sudo journalctl --rotate

Then remove all logs except those from the last 2 days:

sudo journalctl --vacuum-time=2days

Or remove the oldest logs until the archived logs take up at most 100 MB:

sudo journalctl --vacuum-size=100M

How to read logs:

Follow the log of a specific service:

sudo journalctl -fu SERVICE

Show the last lines of a service's log, with extended explanations where available:

sudo journalctl -xeu SERVICE

I mean yeah, -fu stands for “follow unit”, but it's also a nice coincidence when it comes to debugging that particular service.

😂😂
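The -u flag combines with journalctl's other filters. A minimal sketch, assuming a hypothetical helper named recent_unit_errors and a JOURNALCTL override that exists only for dry runs, that prints a unit's entries of priority err or worse since a given time, without the pager:

```sh
#!/bin/sh
# recent_unit_errors is a hypothetical helper, not part of systemd.
# Prints entries of priority "err" (3) or more severe for one unit.
# JOURNALCTL may be overridden, e.g. JOURNALCTL=echo for a dry run.
recent_unit_errors() {
    unit=${1:?usage: recent_unit_errors UNIT [SINCE]}
    since=${2:-1 hour ago}   # any systemd.time(7) spec, e.g. "today", "yesterday"
    "${JOURNALCTL:-journalctl}" --no-pager -u "$unit" -p err --since "$since"
}
```

For example, recent_unit_errors nginx.service today (nginx.service is only a placeholder). Reading the system journal needs root or membership in a group such as systemd-journal.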

--vacuum-time=2days

This implies I keep an operating system installed that long

something something nix?

sudo journalctl --disk-usage

panda@Panda:~$ sudo journalctl --disk-usage
No journal files were found.
Archived and active journals take up 0B in the file system.

hmmmmmm…

user@u9310x-Slack:~$ sudo journalctl --disk-usage
Password:
sudo: journalctl: command not found

Seems like someone doesn't like systemd :)

I don’t have any feelings towards particular init systems.

Just curious, what distro do you use where systemd is not the default? (I assume you at least didn't change it after the fact, if you don't have any feelings (towards init systems ;) ) )

Slackware

Badass! Thanks!

Thank you for this, wise sage.

Your wisdom about managing machine logs will be passed down the family line for generations.

Glad to help your family, share this wisdom with friends too ☝🏻😃

Yeah, if I had dependents they’d gather round the campfire chanting these mystical runes in the husk of our fallen society

@RemindMe@programming.dev 6 months

@ategon@programming.dev is the remindme bot offline?

It's semi-broken currently, and it also works on a whitelist that this community isn't on.

Ok, thanks!

Actually, something I never dug into: does logrotate no longer work? I have plenty of disk space these days, so I wouldn't notice large log files.

If logrotate doesn’t work, then use this as a cron job via

sudo crontab -e

Put this line at the end of the file:

0 0 * * * journalctl --vacuum-size=1G >/dev/null 2>&1

Every day at midnight the logs will be trimmed to 1 GB. Usually the logs are trimmed automatically at 4 GB, but sometimes this does not work.
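One caveat with vacuuming: --vacuum-size= and --vacuum-time= only delete archived journal files, so the currently active files are never removed and usage can stay above the target. On reasonably recent systemd releases, --rotate can be passed in the same call to archive the active files first. A minimal sketch, assuming a hypothetical trim_journal helper and a JOURNALCTL override used only for dry runs:

```sh
#!/bin/sh
# trim_journal is a hypothetical helper, not part of systemd; run it as root.
# --vacuum-* only deletes archived journal files, so --rotate is passed in the
# same call to archive the currently active files before vacuuming.
# JOURNALCTL may be overridden, e.g. JOURNALCTL=echo for a dry run.
trim_journal() {
    max_size=${1:-1G}   # same size syntax as --vacuum-size=, e.g. 500M, 1G
    "${JOURNALCTL:-journalctl}" --rotate --vacuum-size="$max_size"
}
```

The equivalent crontab line would be 0 0 * * * journalctl --rotate --vacuum-size=1G >/dev/null 2>&1.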

If we’re using systemd already, why not a timer?

Cron is better known than a systemd timer, but you can provide an example for the timer 😃

Really, the correct way would be to set the limit you want for journald. Put this into

/etc/systemd/journald.conf.d/00-journal-size.conf:

[Journal]
SystemMaxUse=50M

Or something like this using a timer:

systemd-run --timer-property=OnCalendar=daily $COMMAND

Thanks for this addition ☺️
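A systemd-run timer is transient and does not survive a reboot. A persistent replacement for the cron job is a service/timer unit pair. A minimal sketch, assuming the hypothetical unit name journal-vacuum and journalctl living at /usr/bin/journalctl (true on most current distros), wrapped in a helper that writes both unit files:

```sh
#!/bin/sh
# install_journal_vacuum_timer is a hypothetical helper; run it as root.
# Writes journal-vacuum.service/.timer, which trim the journal to 1G daily.
# The target directory defaults to /etc/systemd/system; pass another
# directory to inspect the files first.
install_journal_vacuum_timer() {
    dir=${1:-/etc/systemd/system}

    cat > "$dir/journal-vacuum.service" <<'EOF'
[Unit]
Description=Trim the systemd journal to 1G

[Service]
Type=oneshot
# Assumes journalctl lives in /usr/bin
ExecStart=/usr/bin/journalctl --vacuum-size=1G
EOF

    cat > "$dir/journal-vacuum.timer" <<'EOF'
[Unit]
Description=Run journal-vacuum.service once a day

[Timer]
OnCalendar=daily
# Run a missed trigger at the next boot if the machine was off
Persistent=true

[Install]
WantedBy=timers.target
EOF
}
```

Afterwards, as root, run systemctl daemon-reload and systemctl enable --now journal-vacuum.timer; systemctl list-timers should then list it.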

If you use OpenRC you can just delete a couple files

Why isn’t it configured like that by default?

It is. The defaults are a little bit more lenient, but it shouldn’t gobble up 80 GB of storage.

Good question, it may depend on the distro afaik

Try 60GB of system logs after 15 minutes of use. My old laptop’s Wi-Fi card worked just fine, but spammed the error log with corrected-error messages. Adding pci=noaer to the kernel command line in the GRUB config fixed it.

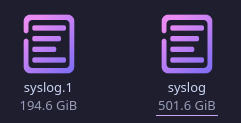
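For the pci=noaer fix mentioned above: on Debian/Ubuntu-style systems, kernel parameters usually go into GRUB_CMDLINE_LINUX_DEFAULT in /etc/default/grub. Note that pci=noaer disables PCIe Advanced Error Reporting, so it silences the messages rather than fixing whatever the card is reporting. A minimal sketch; the add_kernel_param helper is hypothetical and assumes the value sits double-quoted on a single line:

```sh
#!/bin/sh
# add_kernel_param is a hypothetical helper; run it as root.
# Appends a kernel parameter (default pci=noaer) to GRUB_CMDLINE_LINUX_DEFAULT,
# at most once. The parameter must not contain "/" or regex metacharacters.
# If the file has no GRUB_CMDLINE_LINUX_DEFAULT="..." line, nothing changes.
add_kernel_param() {
    file=${1:-/etc/default/grub}
    param=${2:-pci=noaer}
    # Skip if the parameter is already on that line
    if grep -q "^GRUB_CMDLINE_LINUX_DEFAULT=\".*$param" "$file"; then
        return 0
    fi
    tmp=$(mktemp) || return 1
    # Insert " $param" just before the closing quote of the value
    sed "s/^\(GRUB_CMDLINE_LINUX_DEFAULT=\"[^\"]*\)\"/\1 $param\"/" "$file" > "$tmp" &&
        cat "$tmp" > "$file"   # overwrite in place, keeping the file's permissions
    rm -f "$tmp"
}
```

After running it, regenerate the GRUB config, e.g. with update-grub on Debian/Ubuntu or grub-mkconfig -o /boot/grub/grub.cfg elsewhere (some distros, such as Fedora, call it grub2-mkconfig and use a different output path), then reboot.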

I had an issue on my PC (I assume a faulty graphics driver or a bug after waking from sleep) that caused my syslog file to reach 500GiB. Yes, 500GiB.

Nearly 700gb in logs

wtf 🤯

Yeah I was confused as to where all of my storage went xD

*cough*80 GiB*cough*

11.6 mega bites

Ah, yes, the standard burger size.

You just need a bigger drive. Don’t delete anything

Oh lord watch me hoard

Once I had a mission-critical service crash because the disk got full. It turned out there was a typo in the logrotate config, and as a result the logs were not being cleaned up at all.

edit: I should add that I used the commands shared in this post to free up space and bring the service back up

Fucking blows my mind that journald broke what is essentially the default behavior of every distro’s use of logrotate and no one bats an eye.

I’m not sure if you’re joking or not, but the behavior of journald is fairly dynamic and can be configured to an obnoxious degree, including compression and sealing.

By default, the size limit is 4GB:

SystemMaxUse= and RuntimeMaxUse= control how much disk space the journal may use up at most. SystemKeepFree= and RuntimeKeepFree= control how much disk space systemd-journald shall leave free for other uses. systemd-journald will respect both limits and use the smaller of the two values.

The first pair defaults to 10% and the second to 15% of the size of the respective file system, but each value is capped to 4G.

If anything I tend to have the opposite problem: whoops I forgot to set up logrotate for this log file I set up 6 months ago and now my disk is completely full. Never happens for stuff that goes to journald.
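To make the default limits quoted above concrete, a small sketch that computes them for a file system of a given size. The journald_default_limits helper is hypothetical and only mirrors the man page wording (10% and 15% of the file system, each capped at 4G); it ignores any other bounds journald may apply internally.

```sh
#!/bin/sh
# journald_default_limits is a hypothetical helper: given a file system size
# in bytes, print the default SystemMaxUse= and SystemKeepFree= in bytes,
# per journald.conf(5): 10% and 15% of the file system, each capped at 4G.
journald_default_limits() {
    fs_bytes=$1
    cap=$((4 * 1024 * 1024 * 1024))      # systemd size suffixes are base 1024
    max_use=$((fs_bytes / 10))           # 10% of the file system
    keep_free=$((fs_bytes * 15 / 100))   # 15% of the file system
    if [ "$max_use" -gt "$cap" ]; then
        max_use=$cap
    fi
    if [ "$keep_free" -gt "$cap" ]; then
        keep_free=$cap
    fi
    echo "$max_use $keep_free"
}
```

For example, journald_default_limits 21474836480 (a 20 GiB file system) prints 2147483648 3221225472, i.e. 2 GiB and 3 GiB, while any file system of 40 GiB or more hits the 4 GiB cap for SystemMaxUse=.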

It can be, but the defaults are freaking stupid and often do not work.

Aren’t the defaults set by your distro?

AFAICT Debian uses the upstream defaults.

Still boggles my mind that systemd being terrible is still a debate. Like of all things, wouldn’t text logs make sense?

Wait… it doesn’t store them in plaintext?

Nope, you need journalctl to read.

That’s asinine.

Yeah, and you need systemd to read the binary logs. I think there may be a setting to switch to text logs, but I'm not sure, because I avoid systemd when I can.
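journald itself does not write plain-text files, but journalctl can export the journal as text, and -D (--directory) reads journal files from another directory, such as a disk mounted from a machine that no longer boots (this still needs a journalctl binary somewhere). Setting ForwardToSyslog=yes in journald.conf also hands messages to a syslog daemon such as rsyslog, which writes ordinary text files. A minimal sketch of the export; the helper name, the JOURNALCTL dry-run override and the example path are hypothetical:

```sh
#!/bin/sh
# export_journal_text is a hypothetical helper, not part of systemd.
# Writes the journal as syslog-style text with ISO 8601 timestamps.
# Optional second argument: a journal directory to read instead of the
# local system journal, e.g. /mnt/var/log/journal from another disk.
# JOURNALCTL may be overridden, e.g. JOURNALCTL=echo for a dry run.
export_journal_text() {
    out=${1:?usage: export_journal_text OUTFILE [JOURNAL_DIR]}
    if [ -n "${2:-}" ]; then
        "${JOURNALCTL:-journalctl}" --no-pager -o short-iso -D "$2" > "$out"
    else
        "${JOURNALCTL:-journalctl}" --no-pager -o short-iso > "$out"
    fi
}
```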

Wouldn’t compressed logs make even more sense (the way they are now)?

This once happened to me on my pi-hole. It’s an old netbook with 250 GB HDD. Pi-hole stopped working and I checked the netbook. There was a 242 GB log file. :)

systemd-journald keeps at most 4GB of logs by default.

Recently had the jellyfin log directory take up 200GB, checked the forums and saw someone with the same problem but 1TB instead.

2024-03-28 16:37:12:017 - Everythings fine

2024-03-28 16:37:12:016 - Everythings fine

2024-03-28 16:37:12:015 - Everythings fine

Title gore

logrotate is a thing.
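For log files that do not go through journald, the usual fix is a logrotate drop-in. A minimal sketch, assuming a hypothetical write_logrotate_rule helper and example limits (daily, 7 compressed generations, early rotation past 100M) that are illustrative rather than recommended values:

```sh
#!/bin/sh
# write_logrotate_rule is a hypothetical helper; run it as root.
# Writes a logrotate drop-in for one directory of *.log files: rotate daily,
# keep 7 compressed generations, rotate early if a file grows past 100M.
write_logrotate_rule() {
    name=${1:?usage: write_logrotate_rule NAME LOG_DIR [CONF_DIR]}
    log_dir=${2:?usage: write_logrotate_rule NAME LOG_DIR [CONF_DIR]}
    conf_dir=${3:-/etc/logrotate.d}
    # Unquoted EOF so $log_dir expands; the *.log glob is written literally
    cat > "$conf_dir/$name" <<EOF
$log_dir/*.log {
    daily
    rotate 7
    maxsize 100M
    compress
    delaycompress
    missingok
    notifempty
}
EOF
}
```

For example, write_logrotate_rule myapp /var/log/myapp (placeholder names). Running logrotate -d /etc/logrotate.d/myapp afterwards is a dry run that reports what would happen, which helps catch typos like the one described earlier. logrotate itself usually runs once a day, so maxsize only triggers an early rotation if it is scheduled more often.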

Windows isn’t great by any means but I do like the way they have the Event Viewer layout sorted to my tastes.

True that. Sure, I need to keep my non-professional home sysadmin skills sharp and enjoy getting good at these things, but I wouldn’t mind a better GUI journal reader / configurator thing. KDE has a halfway decent log viewer.

It might also go a long way towards helping the less sysadmin-for-fun-inclined types troubleshoot.

Maybe there is one and I just haven’t checked. XD

I recently discovered that the company I work for has an S3 bucket with several TB of network flow logs. It contains all network activity of the past 8 years.

Not because we needed it. No, the lifecycle policy wasn’t configured correctly.

I couldn’t tell for a solid minute if the title was telling me to clear the journal or not

Eh, I just set $ROOTFS to ro and my $HOME to rw.