It cracks me up when people try to draw comparisons between AI in movies and real AI. It’s like pretending you know how cars work because you have seen all the Fast and Furious movies.

haha great analogy

One of the Belgian far-right politicians gave a speech a few days ago in which she pronounced McKinsey as EmSeeKenzie and it was funny as shit.

Not relevant to the article but it made me think of that.

😄

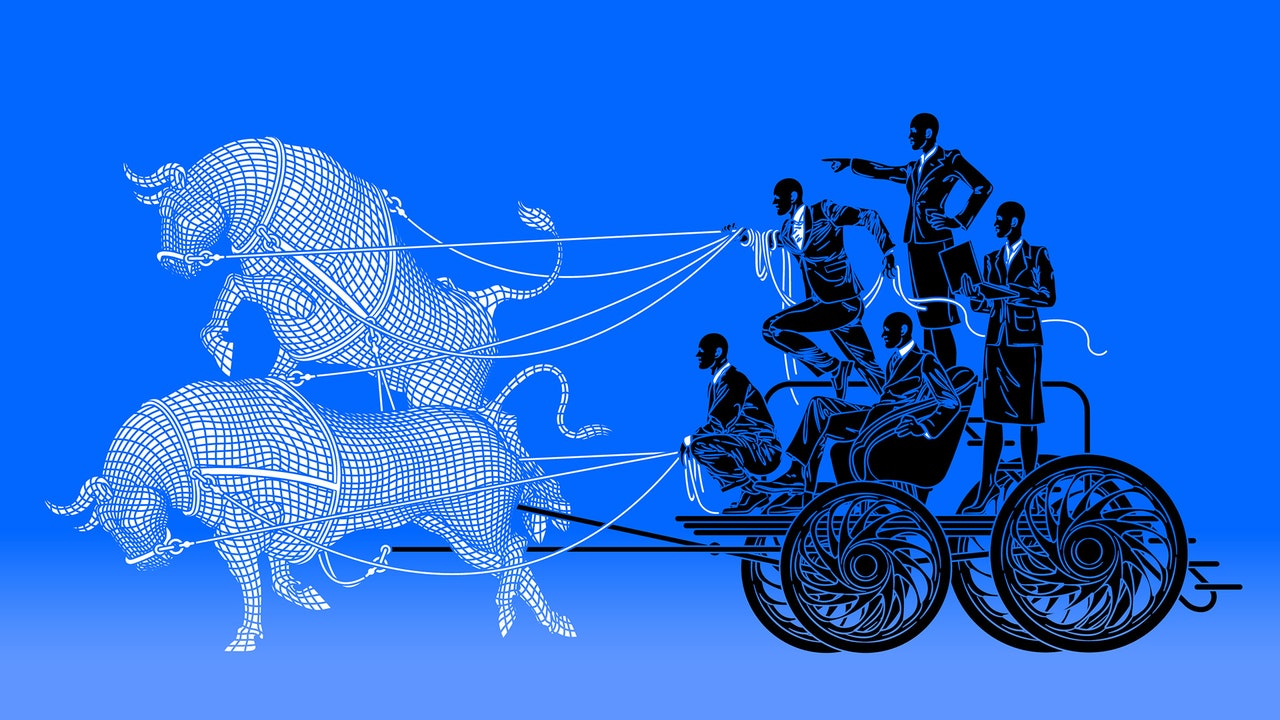

Seeing as McKinsey is a paperclip maximizer in corporate form, it’s not hard to imagine an AI taking over. Especially seeing as western AIs are built by the same class McKinsey works so tirelessly to maximize paperclips for.

Indeed, this is a great related article on the subject.

I did not expect to see a good article (aside from the last paragraph) from BuzzFeed of all places

lol right

Overall, a decent article, but I am getting deeply annoyed at this type of paragraph, which I see everywhere:

When we talk about artificial intelligence, we rely on metaphor, as we always do when dealing with something new and unfamiliar. Metaphors are, by their nature, imperfect, but we still need to choose them carefully, because bad ones can lead us astray. For example, it’s become very common to compare powerful A.I.s to genies in fairy tales. The metaphor is meant to highlight the difficulty of making powerful entities obey your commands…

This is mystagoguery. It strokes the vanity of the tech class, who like to pretend that they’re living in some sort of sci-fi scenario, with mysterious forces at their beck and call; like that episode of Star Trek where the ship’s computer becomes sentient (how?), or that dumb 80s movie where two computer nerds make themselves a living, breathing woman who can somehow control space and time (again, how?). The public eats this up because most of them don’t have the slightest idea of how computers work, or how mundane the tasks that computers perform really are – simple calculations repeated over and over and over again. There is nothing metaphysical about them.

I’d push back on that a bit. The tech stacks are incredibly complex nowadays. Even most people who do programming only know a small niche they specialize in. For example, most people think that C is a low level language, while in reality hardware has evolved so much that chips now have an emulation layer to pretend to work the way C compilers expect them to. This is a great article explaining how complex this all is. Then there’s the whole software stack on top of already immense hardware, operating systems, hardware drivers, virtual machines, and so forth. While the calculations themselves may be simple, the sheer amount of calculations and the logic they encode creates a stunning amount of complexity. The scope of complexity is far beyond what any one person can hold in their head at this point.

I get that, but I would contend it doesn’t make computers any different from most other fields of scientific and/or technical knowledge. There was once a point when a well-educated person could basically comprehend the entirety of, say, physics, and could stay on the cutting edge by reading papers and books by leading physicists. That point was passed by probably the last quarter of the 19th century, and now nobody comprehends the whole of any one science; instead the knowledge is held socially, with various specialists focusing on one specific field.

Furthermore, I have noticed a sort of cleavage between programmers and the scientists/technicians who actually design the equipment. The programmers never seem to understand that what they’re working with has a material base, and so think they can program themselves out of scarcity, or out of having to rely on extraction; they’re vulgar metaphysicians, basically. Most computer scientists seem much more circumspect about the potential of computers, and aware that ultimately, the thing rests on a massive industrial base.

Sure, it’s not magical in that sense, but one big difference is that programming is used widely and largely by people who barely know what they’re doing. People have a problem, they realize they could automate it in some way, throw some code together and tweak it till it does what they need. There’s often very little understanding of what’s happening involved there. People just glue bits of code they find on sites like SO till stuff sort of works. I think this is where the whole magic metaphor becomes applicable. People just learn random incantations that seem to do what they need. What’s actually happening or why is completely opaque to them.

Meanwhile, the problem of people lacking understanding of how important the industrial base is extends to a lot of other aspects of society. Most people don’t know where their food or energy comes from, they don’t understand how supply chains work, and so on. And the economic war with Russia shows just how pervasive the problem is. Pretty much everybody in the west assumed that Russia couldn’t possibly compete with the west economically because on paper western GDP is much higher. However, much of it comes from ephemeral things like software development and the service industry. Now people are shocked to discover that what Russia has is a strong industrial base while the west doesn’t, and that’s what really counts.

Agree. I do think the western ruling class, at the very top levels, understands how important an industrial base is; they just thought they could relocate it to China, and by enslaving the Chinese people economically, could ensure that the PRC remains the “world’s factory” for the foreseeable future. They never envisioned that the Chinese would use foreign investments to build up their own sovereign economy, and eventually become a competitor to the west.

My read: the fact that western governments are currently throwing everything they’ve got at AI development represents little more than their desperate, last-ditch attempt to hold on to economic supremacy. It was hoped that China could be bullied back into a subordinate position, by fomenting unrest within the country and initiating a trade war; that failed. Meanwhile (as you’ve pointed out), the proxy war with Russia showed just how weak western economies really are. Now, US and European economic planners are hoping that some new miracle technology will come and save them from the mess they’re in. AI, in short, is the Wunderwaffe of a failed economic war. Hence, I think, the reason for all the hype about Skynet, the Matrix, etc. It’s partly just that – hype – but it also represents a real hope on the part of the capitalist ruling class, that technology will institute a new paradigm and somehow free them from reliance on China.

(Which brings me to AI art and text generators, the applications of machine learning which seem to have the most people viscerally spooked. There is nothing really very remarkable here; people have known since the 18th century that language and representational art follow patterns that can be mathematically quantified, and that given enough computing power, you could theoretically get a machine to generate speech, pictures, music, etc. But there is also a mystique to the artistic process, and it is felt that a machine which can “create” is somehow one step away from being conscious. Thus the hype around ChatGPT and other programs – which even at their best, produce work indistinguishable from that of a competent, uninspired human workman – is partly propaganda, partly sincerely felt delusion. We are on the cusp of a new millennium, an era of magic abundance, where something can finally be generated from nothing; which aspiration should show us that, in the west, we are no longer dealing with rational actors).

Agreed, and I think there’s another aspect to this. One of the goals with globalization is to ensure that no one country is self-sufficient. Can’t seize the means of production when they’ve been shipped out halfway across the world. The original plan was to privatize China and then do the same shit there, but China proved to be smarter than that.

I agree that AI is largely hopium, and this strategy has already failed. AI tech was supposed to be owned by companies like Google and MS who’d run big server farms and charge for access to these systems. Turns out that whole idea is now dead because people figured out how to combine training from models. You no longer need millions of dollars to train a machine learning system, and thanks to advancements in pruning the neural nets, these can now be run on a laptop achieving similar results to what stuff like ChatGPT can do. Obviously, whatever useful aspects this tech has will also be adopted by China going forward. The amount of hype around AI is obviously off the scale, and eventually people are going to sober up about it.

Incidentally, this is a really good read on how the west is losing in automation to China right now. I don’t think any harebrained schemes westerners come up with are going to change the trajectory.

The same McKinsey that has been using AI to turn businesses into meatgrinders for the last 30 years?

one and the same