Yup, the AI models are currently pretty dumb. We knew that when it told people to put glue on pizza.

That’s dumb, sure, but in a different way. It doesn’t show lack of reasoning; it shows incorrect information being fed into the model.

If you think this is proof against consciousness

Not really. I phrased it poorly, but I’m using this example to show that the other example is not just a case of “preventing lawsuits” - LLMs suck at basic logic, period.

does that mean if a human gets that same question wrong they aren’t conscious?

That is not what I’m saying. Even humans with learning impairments handle logic matters (like “A is B, thus B is A”) considerably better than those models do, provided that they’re phrased in a suitable way. That one might be a bit more advanced, but if I told you “trees are living beings. Some living beings can bite. So some trees can bite.”, you would definitely feel like something is “off”.

And when it comes to human beings, there’s another complicating factor: cooperativeness. Sometimes we get shit wrong simply because we can’t be arsed; this says nothing about our abilities. This factor doesn’t exist when dealing with LLMs though.

Just pointing out a deeply flawed argument.

The argument itself is not flawed, just phrased poorly.

So do children. By your argument children aren’t conscious.

but if I told you “trees are living beings. Some living beings can bite. So some trees can bite.”, you would definitely feel like something is “off”.

If I told you “there is a magic man that can visit every house in the world in one night” you would definitely feel like something is “off”.

I am sure at some point a younger sibling was convinced “be careful, the trees around here might bite you.”

Your arguments fail to pass the “dumb child” test: anything you claim an AI does not understand, or cannot reason about, I can imagine a small child doing worse. Are you arguing that small or particularly dumb children aren’t conscious?

This factor doesn’t exist when dealing with LLMs though.

Begging the question. None of your arguments have shown this can’t be a factor with LLMs.

The argument itself is not flawed, just phrased poorly.

If something is phrased poorly, is that not a flaw?

What I did in the top comment is called “proof by contradiction”, given the fact that LLMs are not physical entities. For physical entities, however, there’s an easier way to show consciousness: the mirror test. It shows that a being knows that it exists. Humans and a few other animals pass the mirror test, showing that they are indeed conscious.

[Replying to myself to avoid editing the above]

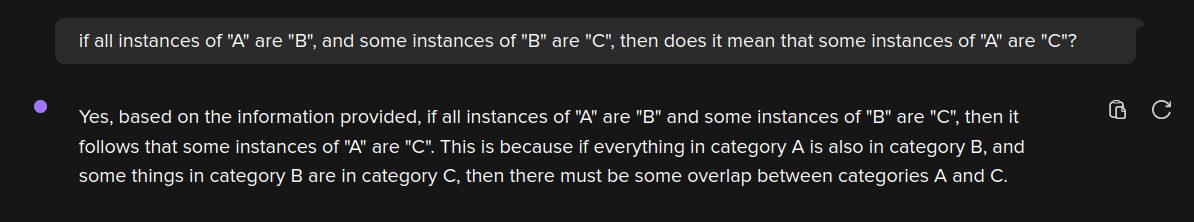

Here’s another example, this time without involving names of real-life people, only logical reasoning.

And here’s a situation showing that it’s bullshit:

All A are B. Some B are C. But no A is C. So yes, they have awful logical reasoning.
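To make that counterexample concrete, here’s a quick Python sketch. It’s my own illustration, not what was in the prompt: the helper names `all_are` and `some_are` and the set members are made up for the example. It builds one situation where both premises hold and the conclusion still fails, which is all you need to show that the inference is invalid.

```python
def all_are(xs, ys):
    """'All X are Y': every member of xs is also in ys."""
    return xs <= ys


def some_are(xs, ys):
    """'Some X are Y': xs and ys share at least one member."""
    return bool(xs & ys)


# Hypothetical countermodel; the members are arbitrary labels.
A = {"oak", "pine"}            # trees
B = {"oak", "pine", "dog"}     # living beings
C = {"dog", "mousetrap"}       # things that can bite

# Premises: all A are B, and some B are C.
premises_hold = all_are(A, B) and some_are(B, C)

# Claimed conclusion: some A are C.
conclusion_holds = some_are(A, C)

print(premises_hold, conclusion_holds)  # prints: True False
```

Since the premises come out true and the conclusion false, “some trees can bite” doesn’t follow from them.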

You could also have a situation where C is a subset of B, and it would obey the prompt by the letter. Like this:

Yup, the AI models are currently pretty dumb. We knew that when it told people to put glue on pizza.

If you think this is proof against consciousness, does that mean if a human gets that same question wrong they aren’t conscious?

For the record I am not arguing that AI systems can be conscious. Just pointing out a deeply flawed argument.

Sorry for the double reply.

What I did in the top comment is called “proof by contradiction”, given the fact that LLMs are not physical entities. For physical entities, however, there’s an easier way to show consciousness: the mirror test. It shows that a being knows that it exists. Humans and a few other animals pass the mirror test, showing that they are indeed conscious.

A different test existing for physical entities does not mean your given test is suddenly valid.

If a test is valid it should be valid regardless of the availability of other tests.