Stopped reading after the first caption, because it says:

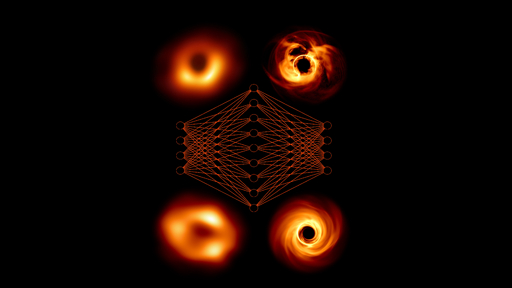

A new AI model (right) adds further details to the first-ever images of black holes

The model doesn’t add “further” details. It composites a pretty little picture and puts it into the image. It’s not what the black hole actually looks like. The “details” are not “further details”, they’re fabricated. Nonsense.

To clarify: they used data which was determined as unusable as it was too noisy for regular algorithms. So the neural net was trained on more data than just a couple of images. They were trained on all the raw data available. Even if the results might not be that accurate, it is actually a good way of solving this type of problem. Scientifically speaking, the results are not accurate, but it might give us a new perspective to the problem.

Kalman filters can be used to filter noise from multivariate data, and it’s just a simple matrix transform, no risk of hallucinations.

Neutral networks are notoriously bad at dealing with small data sets. They “overfit” the data, and create invalid extrapolations when new data falls just a little outside training parameters. The way to make them useful is to have huge amounts of data, train the model on a small portion of it and use the rest to validate.

Fully agree, overfitting might be an issue. We don’t know how much training data was available. Just more than the first assumption suggests. But it might still not be enough.

There aren’t a lot of high resolution images of black holes. I know of one. So not a lot.

Oh, they don’t train on image data. They train on raw sensor data. And as mentioned earlier, they used all the data that was too noisy to produce images out of it.

Of that one mission, right? Until you have thousands of these days sets, it’s the wrong approach.

9 petabytes of raw data have been produced with the EHT in 2017 and 2018. After filtering, only about 100 terabytes were left. After final calibrations, about 150 gigabytes were then used to generate the images.

So clearly a lot of data was thrown away, as it was not usable for generating images. However, a machine learning model might be able to use this data.

As far as AI goes not bad of an application I guess? But I did always learn “crap in, crap out”.