Irving Finkel intensifies

Different games. Finkel plays the Royal Game of Ur.

I know, but it looks like a related/similar game

Literally the same board

If I got this right the relationship between this and the game of Ur is a lot like chess vs. shōgi: similar board and objective; common origin; different pieces, rules, and strategy.

Modern AI techniques are further aiding the understanding of ancient games. By simulating thousands of potential rulesets, AI algorithms help determine which rules result in enjoyable gameplay.

The “AI” in question is probably more like chess engines, and completely unlike LLMs or diffusion models. Just a way to simulate a huge number of games between decent-ish players, for any given ruleset. That allows you to check if any given ruleset has blatant issues, like:

- One side almost always wins

- The winner is determined too early

- The game takes too few or too many turns to complete

- There are “cheesing” strategies

Rulesets with those issues are unlikely to be played by human beings enough to allow the game to spread.
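A rough sketch of that kind of check, in Python. Everything here is an assumption made for illustration: the game is a made-up two-player dice race with no captures, `play_toy_race` and `evaluate_ruleset` are hypothetical names, and the "players" just roll and move. It is not the researchers' platform or the Shahr-i Sokhta rules; it only shows how simulated games can flag a lopsided or too-short ruleset.

```python
import random
from statistics import mean


def play_toy_race(total_distance, rng):
    """Play one game of a hypothetical dice race.

    Each player needs to cover `total_distance` squares in total.
    Returns (winner, turns), where winner is 0 for the first player.
    """
    progress = [0, 0]
    turns = 0
    while True:
        for player in (0, 1):
            turns += 1
            # Assumed roll: uniform 1..4, like a four-sided stick.
            progress[player] += rng.randint(1, 4)
            if progress[player] >= total_distance:
                return player, turns


def evaluate_ruleset(total_distance, games=2000, seed=0):
    """Simulate many games and report two simple health indicators."""
    rng = random.Random(seed)
    results = [play_toy_race(total_distance, rng) for _ in range(games)]
    first_player_wins = sum(1 for winner, _ in results if winner == 0)
    return {
        "first_player_win_rate": first_player_wins / games,
        "mean_turns": mean(turns for _, turns in results),
    }


if __name__ == "__main__":
    # Each "ruleset" here differs only in how far the pieces must travel.
    for distance in (1, 10, 40):
        print(distance, evaluate_ruleset(distance))
```

With `total_distance=1` the first player wins every game on the first turn, which is exactly the sort of blatant issue such a check is meant to catch. Longer distances give a less extreme first-player win rate and longer games.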

There’s probably a lot of guesswork in the ruleset that the researchers chose though.

Amazing research. I read it and I also play the game online. I highly recommend the game; it absolutely makes sense. Play on: https://persianwonders.com/The-game-of-20

I played it a bit, too. It’s interesting, and it does need some strategy, that’s cool.

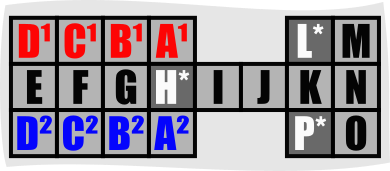

I’ll drop a numbered layout for easy reference when talking about strategy:

In the initial position (A), the cone gets in your way. Move it ASAP to G, so it gets in your opponent’s way too. The cone isn’t terribly useful for capturing opponent pieces.

Accordingly, the wall should be moved to E or F. It’s a bit better than the cone for captures, but ideally you should still capture your opponent’s pawns with your own (as this allows you to push them forward too).

When possible, hit the safe spaces (H, L, P). Not just for the safety of your piece, but because you’ll get an additional roll. H and L in particular are useful for capturing advanced opponent pawns.

At least in this ruleset, it’s typically better to advance multiple pieces at the same time than just one. That’s because this ruleset includes a Zugzwang-like rule (if you can move, then you must move) that sometimes forces you into disadvantageous moves (like retracting the cone from G to A, letting your opponent put their cone there, and now you’ve got two pieces of junk in the middle of the way).

The game of Ur uses three pyramids to decide your roll, while this one uses a four-sided stick. This has gameplay impact because the probability of each roll is different:

| Roll | Odds (Ur) | Odds (Shahr-i Sokhta) |
|------|-----------|-----------------------|
| 1    | 3/8       | 2/8                   |
| 2    | 3/8       | 2/8                   |
| 3    | 1/8       | 2/8                   |
| 4    | 1/8       | 2/8                   |

So don’t rely on your opponent not rolling a 4; they will. And accordingly, if you’re “stuck” with a pawn in the square P, and there’s no nearby opponent pawn, just move it out of the board ASAP.
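For anyone who wants to check those odds, here is a small Python sketch. It rests on two assumptions that match the numbers above but that I haven’t verified against either ruleset: each of the three Ur pyramids lands “marked” half the time, with zero marked counted as a roll of 4, and the four-sided stick is fair. The function names are made up for this example.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def ur_roll_odds(dice=3):
    """Odds for `dice` two-outcome pyramids, assuming zero marked = roll of 4."""
    counts = Counter()
    for outcome in product((0, 1), repeat=dice):
        marked = sum(outcome)
        roll = marked if marked > 0 else 4  # assumption: a "zero" counts as 4
        counts[roll] += 1
    total = 2 ** dice
    return {roll: Fraction(n, total) for roll, n in sorted(counts.items())}


def stick_roll_odds(sides=4):
    """Odds for a fair stick with `sides` faces numbered 1..sides."""
    return {roll: Fraction(1, sides) for roll in range(1, sides + 1)}


def expected_roll(odds):
    """Average roll value under the given odds."""
    return sum(roll * p for roll, p in odds.items())


if __name__ == "__main__":
    print(ur_roll_odds())
    print(stick_roll_odds())
    print(expected_roll(ur_roll_odds()), expected_roll(stick_roll_odds()))
```

Under those assumptions the average roll is 2 for Ur and 5/2 for the stick, so pieces move a bit faster here and a 4 comes up twice as often.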

Nice! It’s actually introducing suggested rules with a basic minimal strategy. I’m sure that, like the Royal Game of Ur, we will see different variations of the rules for this in the future. This will serve as a base. They also mention this in their paper.

Either I got incredibly unlucky or the AI cheats. Seems a neat game but getting really unlucky with the rolls is frustrating

Lol, which mode did you play? I played on normal and kept losing, but I won on my 5th turn.

Played on normal

Clearly related to the Royal Game of Ur, but researchers looking at a bag of game pieces and pretending that they know how the game was played is absolute horseshit.

Researchers thus turned to a combination of archaeological evidence, historical comparisons, and modern computational tools to reconstruct plausible gameplay.

So they used AI to tell them how the game was played?

The Shahr-i Sokhta board game appears to be a strategic racing game akin to the Royal Game of Ur, though with added complexity.

Most people might not pick up on this, but “with added complexity” is one of those phrases that ChatGPT uses a lot more than normal humans, especially when talking about game rules. I wonder if that’s the researcher’s usage, the article writer’s usage, or just a coincidence.

I can only assume you read the actual 78 page article and are comprehensively refuting the researchers’ methodology based on your deep well of knowledge on this subject

I did not, hence why I’m asking the question.

Besides, yes they did use AI of some kind:

Modern AI techniques are further aiding the understanding of ancient games. By simulating thousands of potential rulesets, AI algorithms help determine which rules result in enjoyable gameplay.

Edit:

I have now read the paper and have confirmed my issues with the methodologies. They said they are simulating rulesets using a self-play AI platform. They also seem to be assigning ideas they came up with to different game pieces, and then testing the rulesets with humans to see which ones are fun in several ways.

Whether a rule is fun is mostly unrelated to what the actual historical rule was. There are simply too many possibilities to claim they know what the solution is.

And of course, the headline is being sensationalist and saying things the authors aren’t. I do believe the authors are trying to present this ruleset as a plausibility rather than a true fact.

I can’t see any part of the actual paper that discusses it, but I only skimmed it so I may well have just missed it. It sounds more like having computers play against each other a bunch of times with different rulesets to see which ones produce closer games or reward skill and such.

They didn’t use AI. They also developed a computer game that you can play against AI.

Modern AI techniques are further aiding the understanding of ancient games. By simulating thousands of potential rulesets, AI algorithms help determine which rules result in enjoyable gameplay.

The article disagrees with you, unless I misunderstand what you are saying.

Please read the actual scientific paper, there is a link at the end of the article.

Yep, doing that now:

Artificial Intelligence can help understand historical board games by combining two aspects: historical evidence and playability. However, we cannot be absolutely sure how the game was played originally, as different variants have been found and gameplay can slightly change over time. A team of researchers is using artificial intelligence to uncover the mysteries of ancient board games. See: https://wired.me/culture/rules-to-these-ancient-games-seemed-lost-forever-then-ai-made-its-move/

The link in that footnote says:

These reconstructions begin with each game being broken down into its component ludemes—the rules, board layouts, and so on—and codified. Historical information, such as archaeological data or the timeframe within which the game was played, is then added to this. All this is then fed through Ludii, an advanced platform that is being developed as part of the Maastricht University project, and which uses AI to reconstruct any given game.

“We have this huge design space of possible rulesets which we can test by making artificial intelligence agents play against each other, and seeing how those games behave,” explains Browne. “For example, whether the game is biased toward one player, whether it’s too short or too long, too simple or too complex. These indicators can tell us which rulesets were more likely to have been the games that people enjoyed playing. Quite often the historical reconstructions make sense from a historical viewpoint—they’re very plausible—but when you play them they’re not very interesting as games, so are probably not how those games were actually played. We’re trying to combine these two aspects; the historical evidence with the playability from our simulations.”

They seem to be making the AI generate its own combinations of rules and self-play until it comes across a ruleset that satisfies conditions like play length, complexity, and balance. Even ignoring how amazingly misguided this approach is, there’s no way to know if the parameters they chose are true to the original game.

Don’t get me wrong, an AI that does this is quite valuable for game designers! It’s probably not terribly useful for historical reconstruction though.


It’s not that. I know why you’re saying AI; it’s very misleading (it’s not your fault, it’s the article). There is a link at the bottom of the article: “Analysis of the Shahr-i Sokhta Board Game with 27 Pieces and Suggested Rules Based on the Game of Ur”. Please read that; it’s the actual scientific paper. You can also play the game. It’s a really good paper. It has nothing to do with AI, and I don’t really like AI-generated stuff either…

I’m quoting from the paper itself and the article referenced by the paper. I don’t know what you are trying to get me to do!

That’s correct. That’s from footnotes… But they haven’t used AI for sure…

Did… did you just get triggered by the idea that somebody might have used AI?

Do you need help? Is the big bad AI in the room with us right now?

Man you’re calling me out for the wrong stuff. I am one of the few AI proponents on Lemmy.

I’m triggered by the headline claiming they discovered the rules for a new game when they instead decided to make up plausible rules based on the game pieces that were nearby.

As a professional game designer, I know that there is no way to recreate the rules for a game based on a grab bag of parts - there’s far, FAR too much entropy in the possibilities.

Edit:

Like, imagine I handed you a bag of chess pieces, but there was also a toilet miniature. (I just picked something random that’s not already in a chess variant). I know what rules the toilet piece moves with, because I invented it, but there is virtually no way you can guess what the rules for it are. You could, like the researchers here, make up your own rules for that piece, but the likelihood of it being accurate is close to nil.

Hush.